Don't Share Data among Threads

How to proceed when shared data are needed?

Distributing a task among several threads means horizontal scaling: the more computing resources (processors), the less time it takes to work the task out. Sharing data among threads brings the need for synchronization, which kills the scalability of the computation.

Is there a way out?

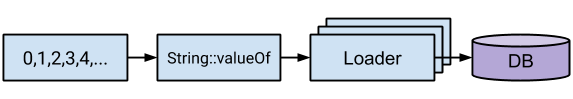

Consider a very simple ETL system where the Extractor produces a finite sequence of numbers, the Transformer converts the numbers into strings, and the Loader finally saves the strings into a database. Because database access is expensive, the Loader works in batches: first it collects data up to a batch size limit, then it writes the whole batch into the database. To use resources efficiently, the Loader runs in multiple threads.
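Before any threading comes in, the pipeline can be sketched on a single thread. This is a minimal illustration under stated assumptions, not the author's code: `UnsafeBatchLoader` and `EtlDemo` are hypothetical names, and a `Consumer<List<String>>` sink stands in for the database write (no JDBC involved).

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.Consumer;
import java.util.stream.IntStream;

// Hypothetical thread-unaware batch loader; the sink stands in for a database batch write.
class UnsafeBatchLoader {
    private final int batchSize;
    private final Consumer<List<String>> sink;
    private List<String> batch = new ArrayList<>();

    UnsafeBatchLoader(int batchSize, Consumer<List<String>> sink) {
        this.batchSize = batchSize;
        this.sink = sink;
    }

    // Not thread-safe: the batch list is mutated without any synchronization.
    void load(String result) {
        batch.add(result);
        if (batch.size() >= batchSize) {
            flush();
        }
    }

    // Writes the last, possibly partial, batch.
    void finish() {
        if (!batch.isEmpty()) {
            flush();
        }
    }

    private void flush() {
        sink.accept(batch);        // hand the full batch over...
        batch = new ArrayList<>(); // ...and start collecting a fresh one
    }
}

class EtlDemo {
    // Extractor -> Transformer -> Loader, all on the calling thread.
    static List<List<String>> run(int count, int batchSize) {
        List<List<String>> writes = new ArrayList<>();
        UnsafeBatchLoader loader = new UnsafeBatchLoader(batchSize, writes::add);
        IntStream.range(0, count)          // Extractor: a finite sequence of numbers
                .mapToObj(String::valueOf) // Transformer: number -> string
                .forEach(loader::load);    // Loader: collect and write in batches
        loader.finish();
        return writes;
    }
}
```

With `EtlDemo.run(10, 4)` the sink receives three writes: two full batches of four strings and a final batch of two, flushed by `finish()`.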

And here comes the trouble: shared data (the batch collection) is introduced, and the Loader's methods must be synchronized. The result is almost the same as, or even worse than, a single thread without synchronization.

| Single thread, unsafe | 1 thread, sync | 2 threads, sync | 4 threads, sync |
|---|---|---|---|
| 51.446 ms | 50.768 ms | 51.083 ms | 51.674 ms |
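A sketch of the synchronized variant described above might look as follows. The names (`SynchronizedBatchLoader`, `SyncDemo`) and the in-memory sink are hypothetical stand-ins for the real loader and database. Every call to `load` takes the loader's monitor, and the expensive write also runs while the monitor is held, so the worker threads mostly wait for one another.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Queue;
import java.util.concurrent.ConcurrentLinkedQueue;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;
import java.util.function.Consumer;

// Hypothetical synchronized loader: all threads share one batch,
// so every access to it holds the loader's monitor.
class SynchronizedBatchLoader {
    private final int batchSize;
    private final Consumer<List<String>> sink;
    private List<String> batch = new ArrayList<>();

    SynchronizedBatchLoader(int batchSize, Consumer<List<String>> sink) {
        this.batchSize = batchSize;
        this.sink = sink;
    }

    synchronized void load(String result) {
        batch.add(result);
        if (batch.size() >= batchSize) {
            sink.accept(batch);        // the expensive write also runs under the lock
            batch = new ArrayList<>();
        }
    }

    synchronized void finish() {
        if (!batch.isEmpty()) {
            sink.accept(batch);
            batch = new ArrayList<>();
        }
    }
}

class SyncDemo {
    static List<List<String>> run(int count, int batchSize, int threads) {
        Queue<List<String>> writes = new ConcurrentLinkedQueue<>();
        SynchronizedBatchLoader loader = new SynchronizedBatchLoader(batchSize, writes::add);
        ExecutorService pool = Executors.newFixedThreadPool(threads);
        try {
            List<Future<?>> futures = new ArrayList<>();
            for (int i = 0; i < count; i++) {
                String value = String.valueOf(i);
                futures.add(pool.submit(() -> loader.load(value)));
            }
            for (Future<?> future : futures) {
                future.get(); // wait until every task has completed
            }
        } catch (InterruptedException | ExecutionException e) {
            throw new IllegalStateException(e);
        } finally {
            pool.shutdown();
        }
        loader.finish();
        return new ArrayList<>(writes);
    }
}
```

The output is correct (every result is written exactly once), but the lock turns the loader into a single lane no matter how many pool threads are used.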

Some optimizations that minimize the size of the critical section are practicable, but not always. How can synchronization be avoided as much as possible?
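As an illustration of such an optimization, here is a sketch that keeps only the list manipulation inside the lock and performs the write after the lock is released. `NarrowLockBatchLoader` and `NarrowLockDemo` are hypothetical names and the sink is again an in-memory stand-in. The catch, and one reason this is not always practicable, is that the sink must now tolerate concurrent calls.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Queue;
import java.util.concurrent.ConcurrentLinkedQueue;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;
import java.util.function.Consumer;

// Hypothetical loader with a narrowed critical section: the lock guards only
// the shared list, the expensive write happens after the lock is released.
class NarrowLockBatchLoader {
    private final Object lock = new Object();
    private final int batchSize;
    private final Consumer<List<String>> sink; // must be thread-safe now
    private List<String> batch = new ArrayList<>();

    NarrowLockBatchLoader(int batchSize, Consumer<List<String>> sink) {
        this.batchSize = batchSize;
        this.sink = sink;
    }

    void load(String result) {
        List<String> full = null;
        synchronized (lock) {
            batch.add(result);
            if (batch.size() >= batchSize) {
                full = batch;              // take the full batch out...
                batch = new ArrayList<>(); // ...and leave an empty one behind
            }
        }
        if (full != null) {
            sink.accept(full); // no lock held: other threads keep collecting
        }
    }

    void finish() {
        List<String> rest;
        synchronized (lock) {
            rest = batch;
            batch = new ArrayList<>();
        }
        if (!rest.isEmpty()) {
            sink.accept(rest);
        }
    }
}

class NarrowLockDemo {
    static List<List<String>> run(int count, int batchSize, int threads) {
        Queue<List<String>> writes = new ConcurrentLinkedQueue<>(); // concurrent sink
        NarrowLockBatchLoader loader = new NarrowLockBatchLoader(batchSize, writes::add);
        ExecutorService pool = Executors.newFixedThreadPool(threads);
        try {
            List<Future<?>> futures = new ArrayList<>();
            for (int i = 0; i < count; i++) {
                String value = String.valueOf(i);
                futures.add(pool.submit(() -> loader.load(value)));
            }
            for (Future<?> future : futures) {
                future.get(); // wait until every task has completed
            }
        } catch (InterruptedException | ExecutionException e) {
            throw new IllegalStateException(e);
        } finally {
            pool.shutdown();
        }
        loader.finish();
        return new ArrayList<>(writes);
    }
}
```

The lock is still there, so every `load` call still contends for it; only the time spent holding it gets shorter.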

Thread Own Data

One approach is to let each thread own its data. Such data doesn't have to be synchronized. The drawback is a design shift of the Loader from a simple implementation to a thread-aware one:

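```java
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;

import org.springframework.jdbc.core.JdbcTemplate;

class BatchLoaderThreadOwnData {

    private final int batchSize;
    private final JdbcTemplate jdbcTemplate;

    // set by the thread calling finish() and read by the loading threads, hence volatile
    private volatile boolean finished = false;

    // the object holds a map of data for each thread;
    // the map is the only concurrently accessed structure, hence ConcurrentHashMap
    private final Map<Long, ThreadOwnData> threadOwnDataMap = new ConcurrentHashMap<>();

    public void load(String result) {
        // look up once; this ThreadOwnData is only ever touched by the current thread
        ThreadOwnData data = threadOwnData();
        data.resultsBatch.add(result);
        // pre-increment: flush as soon as the batch holds batchSize results
        // (assuming batchLoad() clears the batch and resets the counter)
        if (finished || ++data.counter >= batchSize) {
            batchLoad(data);
        }
    }

    // call only after the loading threads are done, otherwise it races with load()
    public void finish() {
        finished = true;
        threadOwnDataMap.values().forEach(this::batchLoad);
    }

    private ThreadOwnData threadOwnData() {
        return threadOwnDataMap.computeIfAbsent(
                Thread.currentThread().getId(),
                id -> new ThreadOwnData(batchSize));
    }

    // ...
}
```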

The results are actually much better:

| Single thread, unsafe | 1 thread, own data | 2 threads, own data | 4 threads, own data |
|---|---|---|---|
| 51.446 ms | 52.129 ms | 29.668 ms | 20.050 ms |
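Because the class above elides `ThreadOwnData` and `batchLoad`, here is a self-contained sketch of the same idea that can be run as is. It follows the structure of `BatchLoaderThreadOwnData` (a `ConcurrentHashMap` keyed by thread id), but `PerThreadBatchLoader`, its nested `ThreadOwnData`, `PerThreadDemo` and the in-memory sink are hypothetical stand-ins. The `finished` flag is left out: `finish()` is simply called once all tasks have completed.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.Queue;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.ConcurrentLinkedQueue;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;
import java.util.function.Consumer;

// Hypothetical thread-own-data loader modeled on BatchLoaderThreadOwnData.
class PerThreadBatchLoader {
    // Data owned by exactly one thread, so it needs no synchronization.
    private static final class ThreadOwnData {
        final List<String> batch = new ArrayList<>();
    }

    private final int batchSize;
    private final Consumer<List<String>> sink; // called from several threads
    // The only concurrently accessed structure: thread id -> that thread's data.
    private final Map<Long, ThreadOwnData> dataByThread = new ConcurrentHashMap<>();

    PerThreadBatchLoader(int batchSize, Consumer<List<String>> sink) {
        this.batchSize = batchSize;
        this.sink = sink;
    }

    void load(String result) {
        ThreadOwnData data = dataByThread.computeIfAbsent(
                Thread.currentThread().getId(), id -> new ThreadOwnData());
        data.batch.add(result);
        if (data.batch.size() >= batchSize) {
            flush(data);
        }
    }

    // Call only after every loading task has completed.
    void finish() {
        dataByThread.values().forEach(this::flush);
    }

    private void flush(ThreadOwnData data) {
        if (!data.batch.isEmpty()) {
            sink.accept(new ArrayList<>(data.batch)); // hand over a copy
            data.batch.clear();
        }
    }
}

class PerThreadDemo {
    static List<List<String>> run(int count, int batchSize, int threads) {
        Queue<List<String>> writes = new ConcurrentLinkedQueue<>(); // concurrent sink
        PerThreadBatchLoader loader = new PerThreadBatchLoader(batchSize, writes::add);
        ExecutorService pool = Executors.newFixedThreadPool(threads);
        try {
            List<Future<?>> futures = new ArrayList<>();
            for (int i = 0; i < count; i++) {
                String value = String.valueOf(i);
                futures.add(pool.submit(() -> loader.load(value)));
            }
            for (Future<?> future : futures) {
                future.get(); // waits, and makes the task's writes visible here
            }
        } catch (InterruptedException | ExecutionException e) {
            throw new IllegalStateException(e);
        } finally {
            pool.shutdown();
        }
        loader.finish(); // flush the partial batch of every thread
        return new ArrayList<>(writes);
    }
}
```

Waiting on each `Future` before `finish()` is what makes it safe for the main thread to read the other threads' batches; without that ordering, `finish()` would race with `load()`.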

Instance per Thread

We can go a step further and let a thread own the whole object. This allows us to reuse the first, thread-unsafe version just as with a single thread. The drawbacks are more complicated execution logic and the need to create new instances, which is not always possible:

```java
// the only synchronization here
Map<Long, BatchLoaderUnsafe> batchLoaders = new ConcurrentHashMap<>();

// ...

// run in an async executor:
BatchLoaderUnsafe loader = batchLoaders.computeIfAbsent(
        Thread.currentThread().getId(),
        id -> new BatchLoaderUnsafe(BATCH_SIZE, jdbcTemplate));

// call it on an unsynchronized, thread-owned loader
loader.load(i);
```

The results are, as expected, comparable to the previous solution:

| Single thread, unsafe | 1 thread, instance per thread | 2 threads, instance per thread | 4 threads, instance per thread |
|---|---|---|---|
| 51.446 ms | 51.919 ms | 30.051 ms | 19.814 ms |
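The snippet above leaves out the executor plumbing. A runnable sketch of the instance-per-thread approach could look like this, assuming a hypothetical thread-unaware `PlainBatchLoader` in place of `BatchLoaderUnsafe`, a hypothetical `InstancePerThreadDemo` driver, and an in-memory sink instead of `jdbcTemplate`.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.Queue;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.ConcurrentLinkedQueue;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;
import java.util.function.Consumer;

// Hypothetical thread-unaware loader, standing in for BatchLoaderUnsafe.
class PlainBatchLoader {
    private final int batchSize;
    private final Consumer<List<String>> sink;
    private List<String> batch = new ArrayList<>();

    PlainBatchLoader(int batchSize, Consumer<List<String>> sink) {
        this.batchSize = batchSize;
        this.sink = sink;
    }

    void load(String result) { // no synchronization at all
        batch.add(result);
        if (batch.size() >= batchSize) {
            flush();
        }
    }

    void finish() {
        if (!batch.isEmpty()) {
            flush();
        }
    }

    private void flush() {
        sink.accept(batch);
        batch = new ArrayList<>();
    }
}

class InstancePerThreadDemo {
    static List<List<String>> run(int count, int batchSize, int threads) {
        Queue<List<String>> writes = new ConcurrentLinkedQueue<>(); // shared, concurrent sink
        // the only synchronization: thread id -> the loader owned by that thread
        Map<Long, PlainBatchLoader> loaders = new ConcurrentHashMap<>();
        ExecutorService pool = Executors.newFixedThreadPool(threads);
        try {
            List<Future<?>> futures = new ArrayList<>();
            for (int i = 0; i < count; i++) {
                String value = String.valueOf(i);
                futures.add(pool.submit(() -> loaders.computeIfAbsent(
                        Thread.currentThread().getId(),
                        id -> new PlainBatchLoader(batchSize, writes::add))
                        .load(value)));
            }
            for (Future<?> future : futures) {
                future.get(); // wait until every task has completed
            }
        } catch (InterruptedException | ExecutionException e) {
            throw new IllegalStateException(e);
        } finally {
            pool.shutdown();
        }
        // all tasks are done: flush the partial batch of every loader
        loaders.values().forEach(PlainBatchLoader::finish);
        return new ArrayList<>(writes);
    }
}
```

Each loader instance is touched only by the pool thread that created it, so `PlainBatchLoader` needs no synchronization; the map lookup and the concurrent sink are the only shared state.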

Conclusion

In the modern world of microservices and distributed computing, scalability is one of the most important attributes. Techniques like immutability are not always applicable, but synchronization must still be reduced to a minimum. Otherwise, performance can be even worse than in a single thread, because the threading overhead must still be paid.

When it is not possible to create a new worker instance for a thread, the worker could be designed to create a new instance of data per thread. When every thread owns its data, there is no need for further synchronization.

The whole code can be found on my GitHub.

Happy threading!