Function Separation in a Microservice

In serverless microservices, functions are the basic building blocks of the service's functionality. How should we design them from the code and deployment perspectives?

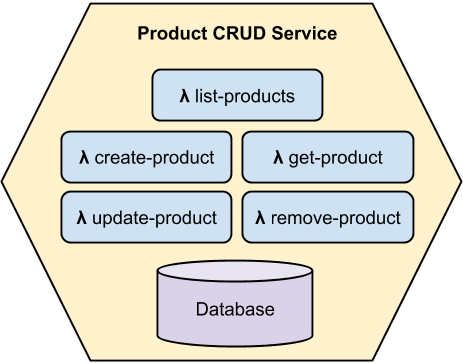

Microservices Anatomy

Let's simply define a microservice as an autonomous service built from one or more resources. A resource can be functionality (functions*), storage (a database), communication (a message topic), etc. Everything the service needs must either be included in its stack or passed in as a parameter (an environment variable). Only the API is exposed; all other resources are private to the microservice. One microservice ⇔ one stack ⇔ one deployment pipeline.
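Passing everything a function needs through environment variables keeps resource names out of the code. Below is a minimal sketch, assuming a hypothetical `loadConfig` helper (not part of the article's code) that reads the required variables once at cold start and fails fast if the stack did not provide one. `PRODUCT_TABLE` is the variable used by the get-product example in this article.

```javascript
// Hypothetical helper: reads the parameters a function needs from its environment.
// The stack is expected to set these; a missing one is treated as a deployment error.
function loadConfig(env, requiredNames) {
  const missing = requiredNames.filter(name => !env[name])
  if (missing.length > 0) {
    throw new Error(`Missing environment variables: ${missing.join(', ')}`)
  }
  // Keep only the requested variables and freeze them so they cannot change at runtime
  return Object.freeze(Object.fromEntries(requiredNames.map(name => [name, env[name]])))
}

module.exports = { loadConfig }

// Usage at module load time (cold start), e.g.:
// const { PRODUCT_TABLE } = loadConfig(process.env, ['PRODUCT_TABLE'])
```

Failing at cold start surfaces a misconfigured stack immediately, instead of on the first request that happens to touch the missing resource.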

According to the Single Responsibility Principle, a component should do one thing and one thing only. Applied at the function level, this gives us one function per action. Consider a simple CRUD microservice in which each CRUD operation is represented by its own simple function:

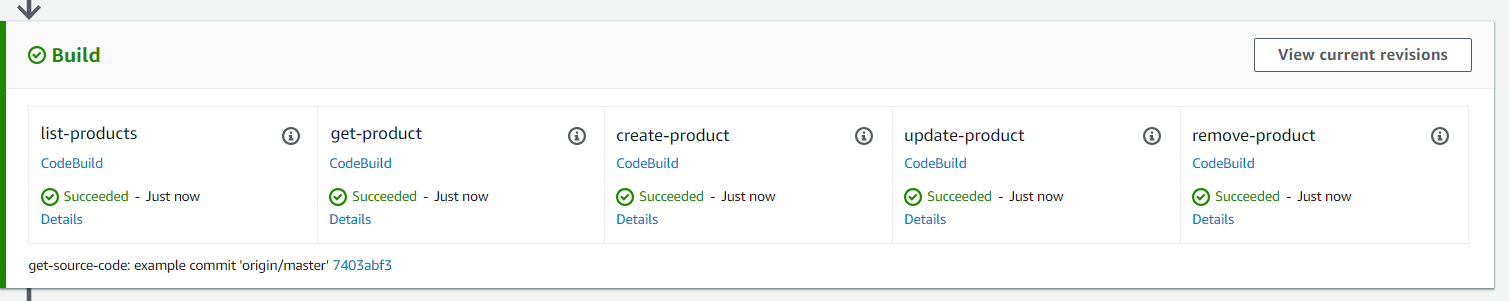

What does this look like from the DevOps point of view? We can create a separate project artifact for every function. Such code is easy to reason about, easy to test, and easy to deploy. The last point deserves a question mark: deploying a single function is easy, of course, but every function also needs its own pipeline actions for build, deployment, and test execution. Setting these up is not difficult, just tedious and repetitive, and it brings additional complexity.

The same goes for the codebase. Take a look at the project layout of this simple AWS Lambda function in Node.js:

```
.gitignore
gruntfile.js
jasmine.json
package.json
package-lock.json
README.md
```

As you can see, there are several files just to set up the module, and all of them must be duplicated for every function.

So one function per action sounds good in theory, but in practice it can be overkill.

Modularization until the last step

Decoupling is probably the most important concept in the design of software systems, so we never give up on this principle. However, we can distinguish between logical and physical coupling. Two modules can be perfectly decoupled even while physically living in the same artifact, as long as they share no code and depend only on APIs (following the principles of hexagonal architecture).
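To make "no shared code, dependencies only on APIs" concrete, here is a minimal sketch in the hexagonal style. All names (`makeGetProduct`, `makeRemoveProduct`, `createInMemoryRepository`) are hypothetical and not taken from the article's code. Two actions live in the same file, yet neither refers to the other: both depend only on a repository port (an object with `findById` and `deleteById`), so any adapter, DynamoDB or in-memory, can be plugged in.

```javascript
// Port (hypothetical): the only thing the actions know about persistence is this shape:
//   { findById(id): Promise<object|null>, deleteById(id): Promise<boolean> }

// Action 1: depends only on the port, not on any other action
function makeGetProduct(repository) {
  return async function getProduct(id) {
    return await repository.findById(id)
  }
}

// Action 2: lives in the same artifact but shares no code with Action 1
function makeRemoveProduct(repository) {
  return async function removeProduct(id) {
    return await repository.deleteById(id)
  }
}

// Adapter (hypothetical): an in-memory implementation of the port, handy for tests.
// A DynamoDB adapter would implement the same two methods.
function createInMemoryRepository(initialProducts = []) {
  const items = new Map(initialProducts.map(p => [p.id, p]))
  return {
    async findById(id) {
      return items.get(id) || null
    },
    async deleteById(id) {
      // Map.prototype.delete returns true if the item existed
      return items.delete(id)
    }
  }
}

module.exports = { makeGetProduct, makeRemoveProduct, createInMemoryRepository }
```

Because each action receives its dependencies from outside, moving one of them into its own artifact later means copying a single module, not untangling shared state.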

Let's consider the following implementation of the CRUD microservice:

```
src/
  index.js
  list-products.js
  get-product.js
  create-product.js
  update-product.js
  remove-product.js
```

index.js

```javascript
// Each action lives in its own module and exports its own handler
const list = require('./list-products').handler
const get = require('./get-product').handler
const create = require('./create-product').handler
const update = require('./update-product').handler
const remove = require('./remove-product').handler

// Single Lambda entry point: routes API Gateway proxy events to the action handlers
exports.handler = async function(event) {
  if (!event || !event.resource || !event.httpMethod) {
    return buildResponse(400, {error: 'Wrong request format.'})
  }

  if (event.resource === '/') {
    // Collection resource
    switch (event.httpMethod) {
      case 'GET':
        return await list(event)
      case 'POST':
        return await create(event)
      default:
        return buildResponse(405, {error: 'Wrong request method.'})
    }
  } else if (event.resource === '/{id}') {
    // Single-item resource
    switch (event.httpMethod) {
      case 'GET':
        return await get(event)
      case 'PUT':
        return await update(event)
      case 'DELETE':
        return await remove(event)
      default:
        return buildResponse(405, {error: 'Wrong request method.'})
    }
  } else {
    return buildResponse(404, {error: 'Resource does not exist.'})
  }
}

// Builds a response in the format expected by the API Gateway Lambda proxy integration
function buildResponse(statusCode, data = null) {
  return {
    isBase64Encoded: false,
    statusCode: statusCode,
    headers: {
      'Content-Type': 'application/json'
    },
    body: JSON.stringify(data)
  }
}
```

As you can see, the main handler is just a router to the other functions, which look like the following:
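The nested `switch` statements work well for two resources. As the API grows, one possible alternative is a routing table. The sketch below is hypothetical (`createRouter` is not part of the article's code) and assumes the event carries `resource` and `httpMethod` as in the API Gateway proxy format used in index.js; the status codes and messages mirror that router.

```javascript
// Hypothetical table-driven variant of the index.js router.
// routes: { [resource]: { [httpMethod]: async handler(event) } }
const hasOwn = (obj, key) => Object.prototype.hasOwnProperty.call(obj, key)

function createRouter(routes) {
  return async function handler(event) {
    if (!event || !event.resource || !event.httpMethod) {
      return buildResponse(400, {error: 'Wrong request format.'})
    }
    // hasOwn avoids matching inherited keys such as 'toString'
    if (!hasOwn(routes, event.resource)) {
      return buildResponse(404, {error: 'Resource does not exist.'})
    }
    const methods = routes[event.resource]
    if (!hasOwn(methods, event.httpMethod)) {
      return buildResponse(405, {error: 'Wrong request method.'})
    }
    return await methods[event.httpMethod](event)
  }
}

function buildResponse(statusCode, data = null) {
  return {
    isBase64Encoded: false,
    statusCode: statusCode,
    headers: {'Content-Type': 'application/json'},
    body: JSON.stringify(data)
  }
}

module.exports = { createRouter }

// Usage in index.js, with the same action modules as above:
// exports.handler = createRouter({
//   '/': { GET: list, POST: create },
//   '/{id}': { GET: get, PUT: update, DELETE: remove }
// })
```

Adding a route then becomes a one-line change to the table, and the router can be tested with stub actions without touching any real action module.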

get-product.js

```javascript
const AWS = require('aws-sdk')

// Table name is injected by the stack as an environment variable
const PRODUCT_TABLE = process.env.PRODUCT_TABLE

const dynamoDb = new AWS.DynamoDB.DocumentClient({apiVersion: '2012-08-10'})

// Handler: the only place for HTTP-specific checks and transformations
exports.handler = async function(event) {
  if (!event || !event.httpMethod) {
    return buildResponse(400, {error: 'Wrong request format.'})
  }
  if (event.httpMethod !== 'GET') {
    return buildResponse(405, {error: 'Wrong request method - only GET supported.'})
  }
  if (!event.pathParameters || !event.pathParameters.id) {
    return buildResponse(400, {error: 'Wrong request - parameter product ID must be set.'})
  }

  try {
    const response = await dispatch(event.pathParameters.id)
    if (response) {
      return buildResponse(200, response)
    } else {
      return buildResponse(404, {error: 'Product was not found.'})
    }
  } catch (ex) {
    // Log the Error object itself; JSON.stringify would drop its message and stack
    console.error('ERROR', ex)
    // Always return a response; returning nothing makes API Gateway answer with a 502
    return buildResponse(500, {error: 'Internal server error.'})
  }
}

// Entry point to the business logic, free of any HTTP concerns
async function dispatch(id) {
  return await getProduct(id)
}

// Loads a product from DynamoDB and maps it to the API representation
async function getProduct(id) {
  const params = {
    TableName: PRODUCT_TABLE,
    Key: { productId: id }
  }
  const res = await dynamoDb.get(params).promise()
  return res.Item
    ? { id: res.Item.productId,
        name: res.Item.name,
        description: res.Item.description,
        price: res.Item.price }
    : null
}

// Deliberately duplicated from index.js: the modules share no code
function buildResponse(statusCode, data = null) {
  return {
    isBase64Encoded: false,
    statusCode: statusCode,
    headers: {
      'Content-Type': 'application/json'
    },
    body: JSON.stringify(data)
  }
}
```

The Single Responsibility Principle is applied here. The function has its own handler, which takes care of the incoming HTTP request. All HTTP-related checks and transformations are done here; the handler is the only place for such "dirty" code. Once the request has passed these checks, it is dispatched. The dispatch function is something like a main method: an entry point to the business logic, which in this case simply fetches the product details.

And other functions in the same spirit...
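For illustration, a create-product.js in the same spirit might look like the sketch below. It is hypothetical: the article does not show this module. To keep the sketch runnable without AWS, persistence is injected as a `saveProduct` function (in the real module it would be a DynamoDB write), and IDs come from Node's `crypto.randomUUID` by default. The structure is what matters: the handler does the HTTP checks, `dispatch` holds the business logic.

```javascript
const crypto = require('crypto')

// Hypothetical factory: saveProduct(product) => Promise<void> is injected so the
// module can run without AWS; in the real stack it would write to DynamoDB.
function makeHandler(saveProduct, generateId = () => crypto.randomUUID()) {
  // Business logic: no HTTP concepts here
  async function dispatch(input) {
    const product = {
      id: generateId(),
      name: input.name,
      description: input.description || '',
      price: input.price
    }
    await saveProduct(product)
    return product
  }

  // Handler: HTTP-specific checks and transformations only
  return async function handler(event) {
    if (!event || !event.httpMethod) {
      return buildResponse(400, {error: 'Wrong request format.'})
    }
    if (event.httpMethod !== 'POST') {
      return buildResponse(405, {error: 'Wrong request method - only POST supported.'})
    }
    let input
    try {
      input = JSON.parse(event.body || '')
    } catch (ex) {
      return buildResponse(400, {error: 'Wrong request - body must be valid JSON.'})
    }
    if (!input || typeof input.name !== 'string' || !input.name) {
      return buildResponse(400, {error: 'Wrong request - product name must be set.'})
    }
    try {
      return buildResponse(201, await dispatch(input))
    } catch (ex) {
      console.error('ERROR', ex)
      return buildResponse(500, {error: 'Internal server error.'})
    }
  }
}

function buildResponse(statusCode, data = null) {
  return {
    isBase64Encoded: false,
    statusCode: statusCode,
    headers: {'Content-Type': 'application/json'},
    body: JSON.stringify(data)
  }
}

module.exports = { makeHandler }
```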

The actions are decoupled from each other and ready to be separated into individual functions in one last step. But if we stop here, we still have very clear code that is easy to test and reason about, and we don't need to adapt the pipeline to this level of granularity.

Test it!

A service built this way is very easy to test. We write a unit test suite for every action (exported function), an integration test suite for the service API (index.js), and an end-to-end test suite for the API Gateway facade.

Source Code

The whole stack can be found on GitHub.

Happy coding!

* Functions are sometimes called "nanoservices" because they are smaller than microservices. Actually, I don't even like the term "microservice", because it confuses people about size ("How small should a microservice be?"), so I'd rather not extend this terminology in the same manner.