WebAssembly Serverless Computing from Scratch

A humble proof of concept of a serverless computing platform in Node.js, based on the idea of nanoprocesses.

WebAssembly (Wasm) describes a memory-safe, sandboxed execution environment. Wasm modules are pieces of bytecode that can be compiled from a broad range of programming languages (C/C++, Rust, Go, AssemblyScript, and many others).

These characteristics make Wasm an ideal candidate for an open and very lightweight serverless computing platform.

Nanoprocesses, a term coined in the Bytecode Alliance announcement, are a theoretical concept of very lightweight sandboxed fibers spawned and run within a single operating system (OS) process. Nanoprocesses are not real OS processes, so they avoid the overhead that OS processes carry, yet they are sandboxed and isolated from each other.

There are already production-ready Wasm serverless computing solutions such as WasmEdge, wasmCloud, and Krustlet, but they do not seem to have implemented the concept of nanoprocesses so far. Atmo is a promising project that appears to be on the right track.

Our aim is, however, to explore the concept by implementing a simple working solution from scratch.

Requirements

Our Wasm platform should meet the standard requirements of today’s serverless computing platforms such as AWS Lambda:

- No operational overheads

- Pay as you go

- Run in isolation

- Resource limiting

- Scalability

- High availability

As Wasm is sandboxed by default, we get a good level of isolation for free. The platform must take care of the rest.

Wasm Modules

To keep things as simple as possible, we will write the Wasm modules directly in the WebAssembly text format (WAT). Our modules are simple enough for that, but any language that compiles to Wasm would work, as WebAssembly is a portable binary format.

Consider these three WAT files:

;; answer.wat

(module

(func (export "_start")

(result i32)

i32.const 42

return))

;; sum.wat

(module

(func (export "_start")

(param $a i32)

(param $b i32)

(result i32)

local.get $a

local.get $b

i32.add

return))

;; sleep.wat

(module

(import "platform" "now" (func $now (result f64)))

(func (export "_start")

(local $target i64)

i64.const 3000

(i64.trunc_f64_s (call $now))

i64.add

local.set $target

loop $loop

(i64.trunc_f64_s (call $now))

local.get $target

i64.lt_s

br_if $loop

end))

The first module returns the number 42 as the ultimate answer, the second returns the sum of two integers, and the third blocks execution for three seconds (busy waiting).

We can compile WAT files to Wasm with the WebAssembly Binary Toolkit (WABT):

$ wat2wasm answer.wat -o answer.wasm

Wasm in Node.js

Our modules have no dependencies, except for the sleep module, which requires a function that returns the current time in milliseconds.

We provide all platform dependencies via an import object named platform:

// run.js

const fs = require('fs');

WebAssembly.instantiate(

fs.readFileSync(process.argv[2]),

{

platform: {

now: Date.now

}

}

).then(({instance: {exports: wasm}}) => {

const params = process.argv.slice(3);

const res = wasm._start(...params);

console.log(res);

});

We can then run any module with Node.js:

$ node run.js sum.wasm 5 2

7

We will reuse this code later as a Wasm executor in the platform.

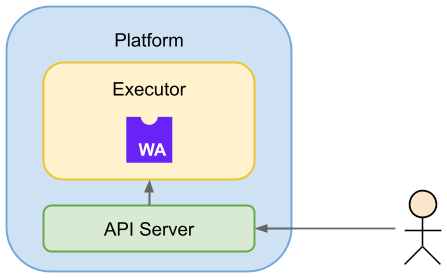

Platform API

We will use a very simple HTTP server with the following endpoints:

- /register/<module> - registers a module to the platform

- /exec/<module>[?param1[,param2[...]]] - executes a registered module

- /stats/<module> - module execution stats (consumed time, etc.)
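The routing for these endpoints could be sketched as follows. This is only an illustration, assuming the comma-separated query syntax listed above; the function name parseRoute and the shape of its return value are hypothetical and not taken from the original source code.

```javascript
// Hypothetical helper: maps a request URL to one of the three platform actions.
function parseRoute(rawUrl) {
  // The base is only needed so that a relative URL such as "/exec/sum?5,2" parses.
  const url = new URL(rawUrl, 'http://localhost');
  const match = /^\/(register|exec|stats)\/([^/]+)$/.exec(url.pathname);
  if (!match) return null; // unknown endpoint

  const [, action, moduleName] = match;
  const query = url.search.slice(1); // drop the leading "?"
  // Only /exec takes parameters; they stay strings, as in run.js.
  const params = action === 'exec' && query !== '' ? query.split(',') : [];
  return { action, module: moduleName, params };
}
```

Inside an http.createServer handler, the result of parseRoute(req.url) would decide whether to register, execute, or report on a module; a null result would map to a 404 response.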

Thread Worker Execution

In Node.js, Wasm modules are executed synchronously on the single main thread.

This means that we cannot execute multiple modules in parallel, which could lead to higher latency on a single node. Every request must wait until the previous one ends:

$ time curl http://localhost:8000/exec/sleep &

3.048s

$ time curl http://localhost:8000/exec/sleep &

5.986s

Another problem with this approach is that we cannot really control the Wasm execution, as the execution flow is taken over by the Wasm module.

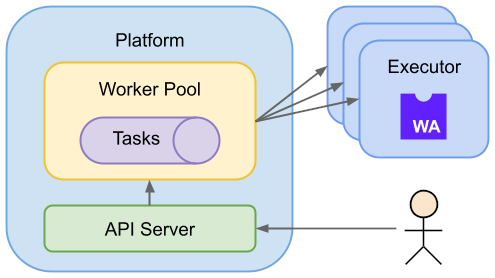

The good news is that we can solve this problem with worker threads. We will maintain a pool of reusable worker threads and execute modules on idle workers.
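A pool of this kind might look roughly like the sketch below. It is not the platform's actual implementation: the class name WorkerPool, its methods, and the message format are assumptions made for illustration, and error handling for crashed workers is left out.

```javascript
const { Worker } = require('node:worker_threads');

// Code run by each worker (hypothetical): instantiate the received bytes, call _start.
const POOL_WORKER_SOURCE = `
  const { parentPort } = require('node:worker_threads');
  parentPort.on('message', async ({ bytes, params }) => {
    try {
      const { instance } = await WebAssembly.instantiate(bytes, {
        platform: { now: Date.now },
      });
      parentPort.postMessage({ result: instance.exports._start(...params) });
    } catch (err) {
      parentPort.postMessage({ error: String(err) });
    }
  });
`;

class WorkerPool {
  constructor(size) {
    this.idle = Array.from({ length: size },
      () => new Worker(POOL_WORKER_SOURCE, { eval: true }));
    this.queue = []; // jobs waiting for an idle worker
  }

  // Resolves with the module's return value once a worker has executed it.
  exec(bytes, params = []) {
    return new Promise((resolve, reject) => {
      this.queue.push({ bytes, params, resolve, reject });
      this.dispatch();
    });
  }

  // Hands queued jobs to idle workers until one of the two runs out.
  dispatch() {
    while (this.idle.length > 0 && this.queue.length > 0) {
      const worker = this.idle.pop();
      const job = this.queue.shift();
      worker.once('message', ({ result, error }) => {
        this.idle.push(worker); // the worker can be reused
        if (error) job.reject(new Error(error));
        else job.resolve(result);
        this.dispatch();
      });
      worker.postMessage({ bytes: job.bytes, params: job.params });
    }
  }

  // Terminates the idle workers; call it only after all jobs have finished.
  close() {
    return Promise.all(this.idle.map((worker) => worker.terminate()));
  }
}
```

With a pool of two workers, for example, two calls to pool.exec(sleepBytes) run side by side, while a third one waits in the queue until a worker becomes idle.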

Now, multiple modules can be executed in parallel without blocking:

$ time curl http://localhost:8000/exec/sleep &

3.042s

$ time curl http://localhost:8000/exec/sleep &

3.039s

Cold Start Optimization

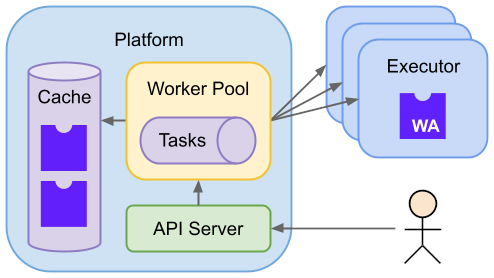

The initial downloading/loading of the Wasm file could take a while. This is called a cold start and it is good practice to avoid it for frequently executed modules.

We can optimize cold starts by caching the binaries of modules that have already been executed. A module binary is kept in memory for a limited time, and any execution of that module within this period avoids the cold start.
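Such a cache could be sketched as below. The class name ModuleCache, the loader callback, and the injectable clock are all hypothetical; the sketch assumes that the lifetime of an entry is counted from its last use, and that expired entries are replaced lazily on the next access.

```javascript
const fs = require('node:fs');

// Hypothetical cache of module binaries with a sliding time-to-live.
class ModuleCache {
  constructor(ttlMs, load = (file) => fs.readFileSync(file), now = Date.now) {
    this.ttlMs = ttlMs;
    this.load = load; // performs the expensive cold start
    this.now = now;   // injectable clock, handy for testing
    this.entries = new Map(); // module name -> { bytes, lastUsed }
  }

  // Returns the module binary, loading it only on a cold start.
  get(name) {
    const time = this.now();
    const entry = this.entries.get(name);
    if (entry && time - entry.lastUsed < this.ttlMs) {
      entry.lastUsed = time; // warm start: extend the lifetime
      return entry.bytes;
    }
    const bytes = this.load(name); // cold start: (re)load the binary
    this.entries.set(name, { bytes, lastUsed: time });
    return bytes;
  }

  // Drops expired binaries; could be called periodically to free memory.
  evictExpired() {
    const time = this.now();
    for (const [name, entry] of this.entries) {
      if (time - entry.lastUsed >= this.ttlMs) this.entries.delete(name);
    }
  }
}
```

A cache created with new ModuleCache(1000) would correspond to eviction after one second.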

Consider cache eviction after one second. When the same module execution is requested less than one second after the last one, the latency will be slightly improved:

$ curl http://localhost:8000/exec/sleep &

# after less than a second:

$ curl http://localhost:8000/exec/sleep &

$ curl http://localhost:8000/stats/sleep

execution time: 6061ms

$ curl http://localhost:8000/exec/sleep

# after more than a second:

$ curl http://localhost:8000/exec/sleep

$ curl http://localhost:8000/stats/sleep

execution time: 6093ms

We saved some time on the cold start and time means money in the pay-as-you-go pricing model!

In Node.js, unfortunately, functions cannot be shared among threads. It is therefore not possible to cache a loaded and initialized Wasm module, which would otherwise be a great performance improvement.

Resource Limits

It is easy to limit the memory usage of a Wasm module by importing a memory of the required size into it:

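await WebAssembly.instantiate(wasmBuffer, {

  ...importObject,

  platform: {

    ...importObject.platform,

    // maximum caps memory.grow; assumes the module imports "platform" "memory"

    memory: new WebAssembly.Memory({initial: PAGES, maximum: PAGES})

  }

});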
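The cap itself comes from the maximum field of the memory descriptor: a memory created with only an initial size can still be grown later. The following sketch, which is independent of any particular module, shows the effect; the helper tryGrow and the chosen page counts are made up for illustration.

```javascript
const PAGE_SIZE = 64 * 1024; // a Wasm page is 64 KiB
const PAGES = 2;             // hypothetical limit for this sketch

// Starts with one page and may never exceed PAGES pages.
const memory = new WebAssembly.Memory({ initial: 1, maximum: PAGES });

// Returns true if the memory could be grown by the given number of pages.
function tryGrow(mem, pages) {
  try {
    mem.grow(pages);
    return true;
  } catch (err) {
    if (err instanceof RangeError) return false; // the limit was reached
    throw err;
  }
}

// The first attempt fits under the limit, the second one does not.
console.log(tryGrow(memory, 1), memory.buffer.byteLength / PAGE_SIZE);
console.log(tryGrow(memory, 1), memory.buffer.byteLength / PAGE_SIZE);
```

From inside a module, the same limit shows up as the memory.grow instruction returning -1 instead of the previous size.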

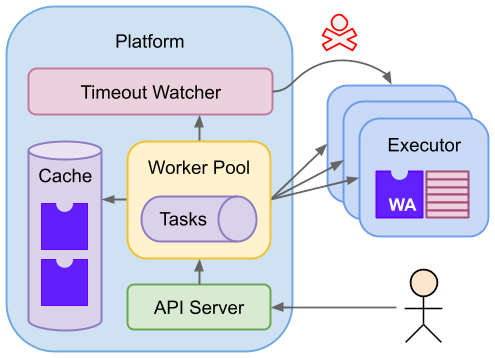

At the time of writing, Wasm is single-threaded, so we don’t have to worry about consuming CPU cores. However, we should definitely limit the consumption of CPU time by introducing execution timeouts.

Execution Timeout

Because we don’t control the Wasm code directly, nothing prevents a malicious user from writing a Wasm module with an infinite loop that would eventually get the platform stuck:

;; stuck.wat

(module

(func (export "_start")

loop $loop

br $loop

end))

We should include some kind of timeout mechanism that terminates the execution once a configured duration has elapsed.

Since there is no way to interrupt a running Wasm instance from the thread that executes it, our options are limited. We could build timeout checks directly into the virtual machine (VM), but that would take considerable effort.

The one option left is to terminate the executing worker:

setTimeout(() => worker.terminate(),

EXECUTION_TIMEOUT_MS);
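Put together with a worker, the idea could be sketched as follows. The function execWithTimeout and the worker source are hypothetical; the sketch spawns a fresh worker per call for simplicity, whereas a pool would have to replace each terminated worker with a new one.

```javascript
const { Worker } = require('node:worker_threads');

// Code run by the worker (hypothetical): instantiate the module and call _start.
const TIMEOUT_WORKER_SOURCE = `
  const { parentPort, workerData } = require('node:worker_threads');
  WebAssembly.instantiate(workerData.bytes, { platform: { now: Date.now } })
    .then(({ instance }) => parentPort.postMessage(instance.exports._start()));
`;

// Resolves with the module's result, or rejects once timeoutMs has elapsed.
function execWithTimeout(bytes, timeoutMs) {
  return new Promise((resolve, reject) => {
    const worker = new Worker(TIMEOUT_WORKER_SOURCE, {
      eval: true,
      workerData: { bytes },
    });
    const timer = setTimeout(() => {
      worker.terminate(); // stops the worker even in the middle of a loop
      reject(new Error(`execution exceeded ${timeoutMs} ms`));
    }, timeoutMs);
    worker.once('message', (result) => {
      clearTimeout(timer); // finished in time, so cancel the timeout
      worker.terminate();
      resolve(result);
    });
    worker.once('error', (err) => {
      clearTimeout(timer);
      reject(err);
    });
  });
}
```

Clearing the timer on success matters: otherwise a stale timeout would still fire later and keep the process alive until then.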

So we can limit execution resources without using any advanced technology such as containers (cgroups).

Needless to say, even containers are not the recommended way to run untrusted code as they don’t really provide 100% isolation. If security is an issue, a VM-based solution should be considered instead.

Conclusion

Our platform is up and running. How far did we get? Let’s see whether we fulfilled all the requirements:

| Requirement | Solution | Achieved |
|---|---|---|
| No operational overheads | Portable Wasm modules | yes |
| Pay as you go | Execution time measurements | yes |
| Run in isolation | Linear memory, Wasm VM sandbox | yes |
| Resource limiting | Linear memory, single-threaded execution, timeouts | yes |
| Scalability | Worker pool | yes |
| High availability | Multiple instances of the platform possible | yes |

It seems that all the goals were achieved or are at least possible to achieve. By using Wasm modules as the computing function format we gain a lot of features for free. Many of them like isolation would otherwise be hard to get without using some advanced technology such as containers.

Moreover, platform maintenance is extremely easy because we don’t have to consider multiple runtimes in order to support different programming languages.

WebAssembly’s universal bytecode format has a bright future in computing platforms, and we will likely see many of these around very soon.

Source code (barely 300 lines) can be found on my GitHub.

Happy computing!